Awful Marketer, Awesome Assistant: 9+ Ways Quality Absolutists Like Us Use AI

Chris Gillespie | October 20, 2023

When we talk to executives about AI in marketing, their eyes light up about two things—speed and scale. Only, it doesn’t offer those. Not yet. It can do plenty else, which we will get to, but not those two because at the core of this technology lies a paradox:

How do you know if the output is any good?

A data provider client of ours ran into a similar issue in the mid-2010s. They outsourced verifying their data to thousands of “cloud workers” in developing countries who’d confirm people’s phone numbers and addresses. The client loved that this scaled and was cheap. Yet it presented a quality problem: The only way to know if that data was accurate was to … check it themselves. So they set up an adversarial system whereby multiple cloud workers had to compete and agree, which was probabilistically better. Then they added onshore analysts to verify random samples. By the end, their “automation” was very much still a carnival of people. It was the software equivalent of three kids in a trenchcoat.

Companies seeking speed and scale—who are now publishing eruptions of SEO articles or staccato personalized (read: impersonal) email campaigns—are being humbled by that paradox. Infinite creation demands infinite supervision.

And to make it worse, many marketers’ first instinct is to hand the AI tasks that they themselves dislike—which, as the marketer Melissa Bell and Donnique on our team have pointed out, are the ones they tend to be the least skilled at. Professed non-writers assign it writing. Non-math people demand analysis. Non-coders ask it to code. But by definition, they are unqualified to judge its output. Is the writing good? Logic sound? Code clean? They’d be the last to know. They’re not a writing/math/code person.

That said, there are many good ways to apply it. To judge this technology solely by what it cannot do would be like judging the first iPhone by its absence of apps. The apps are coming. In this article, we’ll explain the uses available today so your team can skip years of despairing attempts to apply AI to tasks still best done by hand.

I wrote this article with substantial input from Deanna Graves, CEO of demand agency DG Marketing, and Grant Grigorian, CEO of the AI analytics startup Moji.

First, what it verifiably cannot do—a warning-filled owner’s manual (followed by the positive stuff)

Think of a large language model (LLM) as 1,000 eager but otherwise unfeeling interns, who do precisely as told, locked in a room with laptops wired to a blurry simulation of the internet. Some of their versions of the internet are two years outdated. All are missing niche details (try looking up any new startup). All are limited to the information you can glean from Google or your local library.

Generated with Midjourney

Those 1,000 interns do not actually think. They guess the next word. Some, like BERT models, are able to consider a few preceding and following words. But make no mistake—all are improvising, with no sense of where they’ve come from or where they’re going. If they provide winning insight or analysis, it is for the same reason infinite monkeys would eventually write Shakespeare. These interns are copying, pasting, and rephrasing. We cannot delude ourselves by assuming they think or reason, and must remember they are sociopathically focused on trying to guess whatever it is we want to hear. Which invites our confirmation bias.

And they present their answers with unerring confidence supported by no evidence. Below are three quotes attributed to us three authors that we've never actually said.

This is all quite bad news for people who want to outsource their thinking. One client told us their CEO now responds to people’s Slack messages with ChatGPT to save time. How that CEO thinks it will consider the context of the message, the unstated premises, the questions behind the questions, or his relationship to that individual—things humans do quite well but which are not available to LLMs—is beyond us.

Let us put it bluntly. General LLMs do not:

Think or reason except by proxy

Formulate logical arguments except by proxy

Know what is or is not insightful (only what is acceptable)

Have access to all information on the internet (only some; blurry JPEG)

Have specialized knowledge about your buyer (only the training data)

Know your prior experiences

Know the context beyond the prompt

(Most) Understand the history of your interactions with it

(Most) Improve based on an ongoing understanding of you and your buyers

Consider your product and sales cycle

Write with structure, logic, emotion, or storytelling devices

Adjust for their manifold biases

(Most) Keep your proprietary data secure

The more specialized a model is to a certain use case, the better it becomes at these. According to ChatGPT’s creators, it cannot perform basic math because that wasn’t in the dataset. But for general models, which is most everything commercially available, those 1,000 unfeeling interns are just trying to get you to approve the damn paper, sir. Therein lies the supervision paradox:

How do you know if the output is any good?

At this stage, AI is less of a creator and more of a thought partner and research assistant. Not unlike—and this is not to disparage it—some sort of hyper-intelligent set of tarot cards. I do not believe tarot cards hold supernatural power. But I do feel a strong reaction when I pull a card and it calls me a fool. I grow incensed, disagree, and this interaction stimulates my thinking. Similarly, wrong AI answers can lead to right thinking. (Which by the way, raises questions about the very idea of free will, points out Sarah on the Fenwick team. After all, if AI thoughts are artificial and our own natural, where is that line? Do you really know from whence your own thoughts arise? Don’t they just sort of … happen? But I digress.)

Used as an assistant, general LLMs can be fantastic at:

Critiquing your output

Critiquing its own output (start a new session)

Generating many ideas or headlines to react to

Gathering many concepts for further research

Understanding whatever was in their training data set

Producing an outline to react to

Summarizing vast amounts of data as a starting point

Providing information where you otherwise would have none (in a “something is better than nothing” scenario)

Offering a best-guess interpretation of abstract data

Generating charts and graphs from raw data

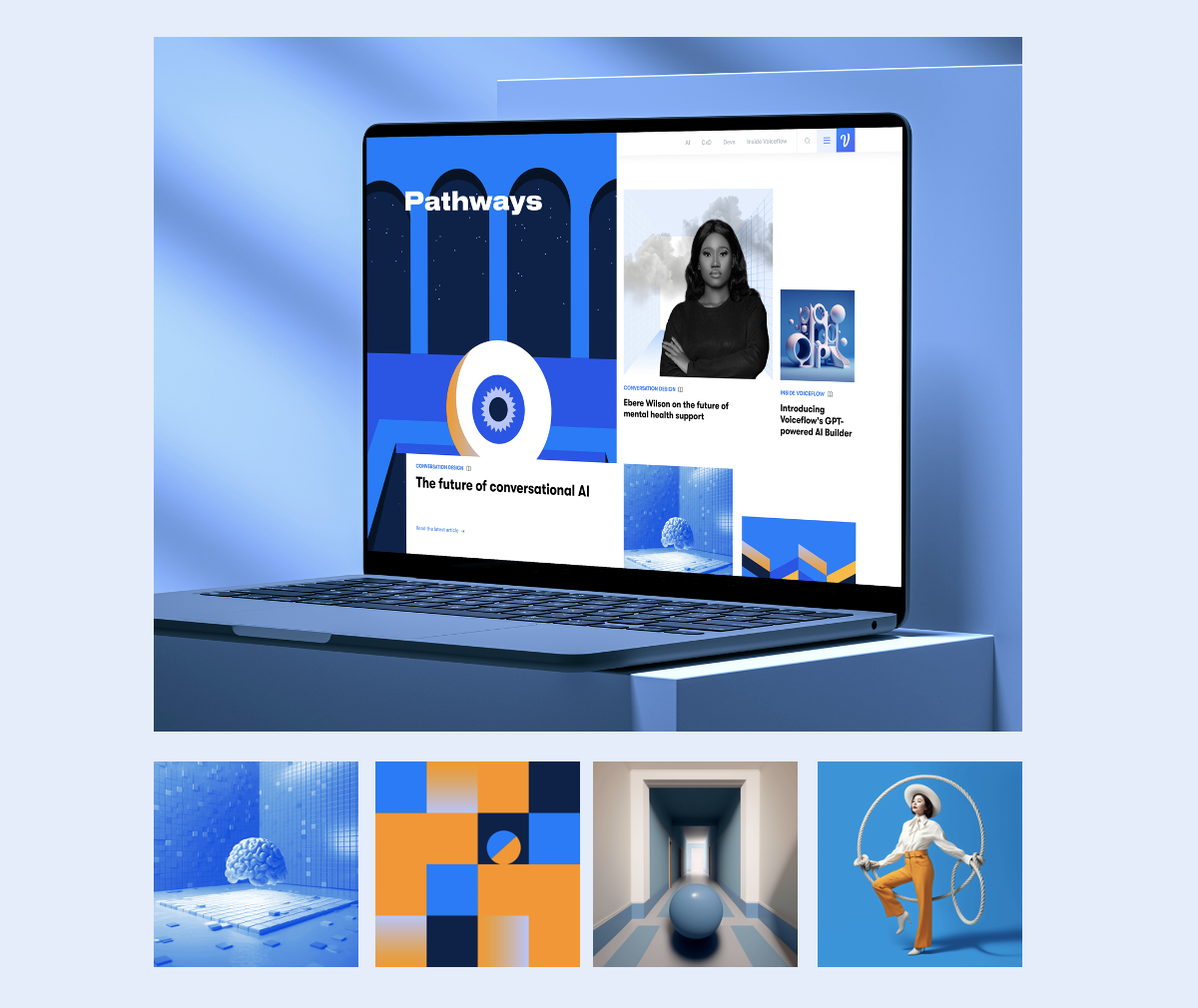

Generating abstract visuals; see some of Pathways’ art

Translating (including into and out of code)

Eliminating dashboards and reports—simply talk to your data

Allowing us to converse more naturally with machines

We believe that the last point may be what history remembers generative AI for. Its writing certainly does not win awards. But these models understand English. And not with Siri’s “Sorry, I didn’t catch that” level of understanding, but actual understanding at a conversational level. This is profound. It is an entirely new interface between people and machines. No longer do we need to peck around in an interface looking for a report or even learn new software. We can simply talk. Your authors can imagine this leading to even more direct human-to-machine languages that bypass the written and spoken word entirely, for words are but a poor simulacrum of human thought. But again, we digress.

With the above caveats in mind—with the warning labels applied and the motives of your unfeeling interns laid bare—let’s explore ways you can integrate it into your marketing.

9 uses for AI in your marketing

You don’t want it directly interfacing with people (yet) but you do want it interfacing with you, where you can react to, build upon, and research further. For example, to produce Pathways’ art, Fenwick’s designers don’t just accept the first output. It requires someone with a dozen years of experience to prompt, refine, and select a winner. Yet with AI, someone with all that experience can get an astounding amount done.

Below are our current uses across demand marketing and content. We’re keeping this mostly tool-agnostic, but your options include GPT-4, Jasper, Grammarly, and Perplexity. And we will return frequently to update these use cases as we learn more, and to provide more specific examples.

We’re currently running tests, so we’ll enrich these with more data and screenshots over the next few weeks.

1. ABM, deal, and funnel data stories

The stories we tell ourselves about buyers tend to be fuzzy. They’re a bit of a Rorschach test for revenue teams—everyone sees something different. That’s because they're always based on speculation and we humans aren’t great at deciphering data. We do excel at asking questions and telling stories, though, and we’re finding that clients can use AI to analyze their ABM and funnel data for those teams to tell stories around.

Some, like ChatGPT-4, can generate charts that don’t exist in Marketo, Engagio, etc. and help you understand campaign performance in new ways. The upside is these are new ways to visualize things. The downside is, they are new and you may have to teach the AI what a bar chart is. (Pictured.)

This is what ChatGPT-4 produced when asked to rank Fenwick’s own blog articles by engagement.

How to do it:

Use the ChatGPT-4 code interpreter

Export funnel data as a .CSV

Prep the .CSV for ChatGPT (most of the work)

Upload with a prompt, e.g. “Show me a line graph of this data” or “Explain the trends you see in this data.” The more specificity the better.
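Since prepping the .CSV is most of the work, here is a minimal Python sketch of what that prep might involve. It assumes a hypothetical export with columns like "Stage," "Created Date," and "Email." The column names, the date format, and the choice to drop contact fields are all illustrative assumptions, not a description of any particular tool’s export.

```python
import csv
import io
from datetime import datetime

# Hypothetical columns to drop before uploading (assumed to hold personal data).
SENSITIVE_COLUMNS = {"email", "phone"}


def prep_funnel_csv(raw_text, date_column="created_date", date_format="%m/%d/%Y"):
    """Return cleaned CSV text: tidy headers, no blank rows, ISO dates, no contact fields."""
    reader = csv.DictReader(io.StringIO(raw_text))
    # Normalize headers, e.g. "Created Date " -> "created_date"
    headers = {h: h.strip().lower().replace(" ", "_") for h in reader.fieldnames}
    kept = [new for new in headers.values() if new not in SENSITIVE_COLUMNS]

    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=kept, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        # Strip stray whitespace; treat missing cells as empty strings
        clean = {headers[k]: (v or "").strip() for k, v in row.items() if k in headers}
        if not any(clean.values()):
            continue  # skip rows where every cell is empty
        if clean.get(date_column):
            # Rewrite dates as YYYY-MM-DD so they sort and plot unambiguously
            parsed = datetime.strptime(clean[date_column], date_format)
            clean[date_column] = parsed.date().isoformat()
        writer.writerow({k: clean[k] for k in kept})
    return out.getvalue()
```

Run your export through something like this, skim the result by eye, and only then upload it with your prompt. If your dates arrive in another format, change the assumed `date_format` to match.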

2. Red teaming: Challenge your strategy and find flaws

This is the hyper-intelligent tarot card approach: Ask the AI to critique your idea, question your assumptions, and identify things you hadn’t thought of. For example, give it your marketing plan (if it isn’t sensitive) and ask what might go wrong. This beats asking it to generate something from scratch, which is often way off base (AI is good at probabilistic generalities and iteration, not deterministic specifics and creation).

Prompt: “Pretend you are an extremely detail-oriented chief marketing officer with extensive knowledge about marketing technology and digital buyer’s journeys. You want this plan to go flawlessly. What gaps can you find in this plan?

My company: __

My buyer: __

The plan: __”

Pictured below, just a snippet. It was correct that my persona was way underdefined.
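If you plan to reuse this prompt across plans, a small helper can keep the wording consistent. The sketch below is only that, a sketch: the function name and the blank-field check are our own illustrative choices, and it builds the prompt text without calling any AI service.

```python
RED_TEAM_TEMPLATE = (
    "Pretend you are an extremely detail-oriented chief marketing officer "
    "with extensive knowledge about marketing technology and digital "
    "buyer's journeys. You want this plan to go flawlessly. "
    "What gaps can you find in this plan?\n\n"
    "My company: {company}\n\n"
    "My buyer: {buyer}\n\n"
    "The plan: {plan}"
)


def build_red_team_prompt(company, buyer, plan):
    """Fill the red-team template, refusing to build a prompt with blank fields."""
    fields = {"company": company, "buyer": buyer, "plan": plan}
    missing = [name for name, value in fields.items() if not value.strip()]
    if missing:
        raise ValueError(f"Fill in before sending: {', '.join(missing)}")
    return RED_TEAM_TEMPLATE.format(**{k: v.strip() for k, v in fields.items()})
```

The blank-field check is deliberate. An underdefined buyer is exactly the kind of gap the critique tends to flag, so it is cheaper to catch an empty one before you paste the prompt.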

3. Understand your buyer

Warning: if you are not uploading sensitive customer data, the AI does not have specialized information on your buyer, only generalized information. (Kindo.ai addresses this. Full disclosure: They are a Fenwick client, so we’re biased.) And good marketers know that personas often run aground on the rocks of generality, like when GPT-4 told me that a buyer “finds books on Amazon.” Remember, you are prompting 1,000 eager but unfeeling interns, not a sage font of knowledge with deeper wisdom to offer. Sometimes you uncover gems. More often, just scree.

That said, this exercise can provide an interesting foundation and can occasionally surface details that will surprise you. And this is important to stress: They can only surprise you in areas where you already have knowledge. You’ll say, “Oh right, I forgot about that conference,” or you’ll check out the print magazine and realize it’s a darn good fit.

If you have an AI service that’s data privacy safe, have it analyze a corpus of valid information, like recorded sales calls, win/loss analyses, and CRM data.

The approach:

Ask for your buyer’s “jobs to be done”

Ask about their concerns

Ask about their potential objections

Ask it to rephrase their problem in their own terms

Ask it to rephrase messaging in their own terms

Ask it where they get their news and information

Ask it where they spend their time

Prompt it to review your materials to generate a “character,” and in that same chat, ask that character questions (from Orbit Media’s winning analysis on AI).

4. Generate asset promo packs

In our experience, everyone will focus so hard on producing a whitepaper that they forget all the derivative promotional assets and programs that’ll actually get people to it. They scramble to make those at the end, and unless they’re having different writers on the team create those (what Fenwick calls the “rule of avoidance”—have someone less close to it tell you what’s most intriguing), they can underwhelm.

AI can help, though it requires very precise prompting: Ask it to break longer assets up into summary emails and LinkedIn posts, with the context of your company’s style (left to their own devices, posts will be a breathless parade of hyperbole and exclamation points) and the call to action.

It can do pretty serviceable meta text. And it excels at random and zany headlines. They are trope-y. They are not things you would publish without editing. But ask it to Gen Z-ify your subject lines with a 5 out of 5 spice level and you’ll find it surprises you. And certainly gets you thinking outside the usual drip.

5. Generate visuals, customer-facing and otherwise

Once you have a prompt, images take seconds to generate. It takes time to produce those prompts, but the equivalent production work that would have taken weeks can be done in seconds.

There are many caveats. One, achieving a precise prompt requires a designer with enough experience to know what a Freisian style is, for example, and whether it’s the style you want, and if Midjourney has achieved it. Two, these images are off-brand (by definition; they are derivatives) and not copyrightable. Three, they’re very difficult to keep within a style of your choice and consistent. You have to find a style Midjourney knows and can replicate. Which in many cases, is a worse alternative to having an artist create something that genuinely matches your brand.

This is not a replacement. It is a tool for a professional designer to achieve far more. We’re particularly excited for Adobe Firefly and Generative Fill.

Use cases to consider:

Blog thumbnails

Email graphics

Ambient interstitials

Campaign-themed profile pictures

Organic social graphics

Paid social graphics

Email signature ads

Art for Pathways, some of it AI-generated.

6. Help generate long-form content

Full disclosure, Fenwick has not applied this in our own work because the technology is not there yet. It is most effectively applied to help junior content teams perform more like mid-level ones. Many of the benefits, like brainstorming and helping writers overcome blank page paralysis, aren’t useful to experienced writers. Nor does it speed up research, because good writers don’t want an average of all answers available in the training data. They want one good one, clearly stated, from a credible source. This means it’s faster to go to Harvard Business Review or Bain.com than to trace the AI’s best guesses back to their source.

And for reasons described in the second section above, one cannot edit bad bones to good. The writer must still provide the structure and flow.

All that said, junior team members can use it to:

Expand or improve your interview questions

Brainstorm topics

Suggest ways to turn one article into a series

Identify topics you haven’t covered

Brainstorm keywords

Generate an outline

Here’s a good use for when you must generate text with AI: Give a tool like Grammarly (the enterprise version) a truth set of five articles written by your best writer, then prompt it with new information and ask it to write that up in the style of those five articles.

7. Get everyone writing more like your best writers

Okay, this is not an AI use case. But it belongs in this list because, honestly, it is my favorite application for assistant software. Writing software like Grammarly allows you to set a style (you’ll have to upload your guide as a .CSV) and then enforce it across the entire company. Michael Tran, a solution engineer there, says this works for three reasons:

It’s working off your company context—It’s searching your style guide, and providing specific links back to where it got that advice from. Thus, hallucinations are fewer and easy to spot.

It helps duplicate your best writers’ writing—If they are part of that “truth set,” and contribute examples to the guide, it helps everyone see how to be like them.

It’s integrated into people’s workflow—Everyone ignores PDF style guides. This makes yours unignorable, and truly useful.

Whereas some companies are firing their writers and instituting the dismal mediocrity that is people who don’t know clear writing using ChatGPT, you can be helping everyone in the company be like your very best.

8. Audit your content

One client of ours spent half a year auditing their 9,000 pieces of content. That individual was so burnt out, they never published the results and resigned shortly after. Few content teams audit their content this exhaustively. Most do not audit at all. This is a budding use case for AI. We find that the summaries lack insight for obvious reasons (most are simply rephrasing, “Use more customer stories”). Yet they do produce something, which can be better than nothing, and this is an area where we can imagine them adding far more value once they improve.

The downside of this work today is you must use a .CSV as an aperture, which means you must gather all that content within the .CSV. (No model we are aware of can do the discovery.) Or, use the less powerful Perplexity, which, unlike ChatGPT, can search the internet.

Use it to:

Identify throughlines

Categorize content

Match content to personas and stages

Validate messages with sales, customers, etc.

Ask it to identify missing content

Ask it to generate interview questions that’d fill content gaps
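Gathering everything into one .CSV is the tedious part, so here is a rough Python sketch of that step. It assumes you have already pulled your content into a list of records, one per piece. The field names ("title," "url," "format," "published," "body") are hypothetical, as is the 500-character excerpt limit, which we picked only to keep the file small.

```python
import csv
import io

# Assumed inventory columns; adjust to whatever your audit needs.
COLUMNS = ["title", "url", "format", "published", "excerpt"]


def build_content_inventory(items, excerpt_chars=500):
    """Write content records to CSV text, one row per unique URL."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    seen = set()
    for item in items:
        url = item.get("url", "").strip()
        key = url.rstrip("/").lower()  # treat /page and /page/ as the same piece
        if not key or key in seen:
            continue  # skip records with no URL and repeats of one already listed
        seen.add(key)
        body = " ".join(item.get("body", "").split())  # collapse whitespace and newlines
        writer.writerow({
            "title": item.get("title", "").strip(),
            "url": url,
            "format": item.get("format", "unknown"),
            "published": item.get("published", ""),
            "excerpt": body[:excerpt_chars],
        })
    return out.getvalue()
```

Save the returned text as a .CSV and upload it with prompts like the ones above. Keeping only an excerpt of each piece is a tradeoff: the file stays manageable, but the AI sees less of each article.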

9. Program planning and budgeting

Sometimes you prompt the AI. Sometimes the AI prompts you. We’ve found the Google Chrome plugin AIPRM injects reasonably useful templates into ChatGPT that allow you to produce tabular budgets and the like, which you can then copy and customize.

Use it to:

Design digital marketing channels and campaigns

Budget (account-based budget or a demand-gen budget)

Ask for a “conservative” versus “aggressive” breakdown for your resource planning

Ask it to format your data through an “ABM lens” so you can view budget by micro-cluster of accounts (100 accounts) for 1:few programs, or 1:1 for strategic accounts

Build customer profiles and channel recs

Summarize trends into recommendations

Identify white space customer opps

Nurture (select and send messages)

AI is an admirable marketing assistant, for now

It won’t be replacing us anytime soon, we can say that. It cannot be trusted to write customer-facing things. Its infinite creation is limited by our finite ability to supervise it. But you can try the nine applications above and find some reasonable time savings or added efficiency. Especially if you’re at a startup where it’s just you and it helps to have an app red team your decisions, or you’re at a very large company where there’s no time to get anything done in between all the meetings.

What we are very excited for, and what we’ll update this article with as we discover it, are applications that allow the best AI models to interface with all the software we use at work, so we needn’t do all the gathering and formatting. When it’s a bit more versatile (when its “content audit” actually spans all our channels and digs up items we hadn’t considered content but which play a key role in the buyer’s journey), we’re onto something interesting. And when it can tell an accurate story around our ABM funnel that saves us all the research and tells us exactly how to act, that’s when we’ve entered a new era, where our 1,000 unfeeling interns are maybe due a small promotion.

Thanks to Clarissa Kupfer and Amanda Tennant for graphics, Carina Rampelt, Sarah Bellstedt, and Donnique Williams for edits and research, Melissa Bell for a LinkedIn comment that inspired the "delegate your dislikes" bit, Michael Tran at Grammarly for a stimulating conversation, and Orbit Media Studios for their pioneering writing on this topic.